Autonomous Vehicles

Autonomous vehicles have long been viewed as the logical next monumental breakthrough in engineering. A fantastical feat depicted throughout Hollywood and analyzed in many journals, autonomous driving is one of the most highly scrutinized potential breakthroughs of this decade. There are six levels of autonomy, numbered 0 through 5, which form a progressive pathway to level 5: full autonomy. This raises several questions: How long until we reach complete autonomy? What level of autonomy have we reached today? And how is it accomplished? First, let’s get a clear picture of what each level of autonomy entails.

Levels of Autonomy

Level 0

This level represents a complete lack of autonomy: the vehicle is controlled manually. The human is responsible for the entire “dynamic driving task”, although a few systems may be in place to aid the driver. The “dynamic driving task” refers to the real-time operational and tactical functions required to operate a vehicle in on-road traffic. Systems that do not “drive” the vehicle, such as emergency braking or standard cruise control, do not qualify as autonomy and are therefore considered level 0 systems. Many vehicles on the road today operate at level 0.

Level 1

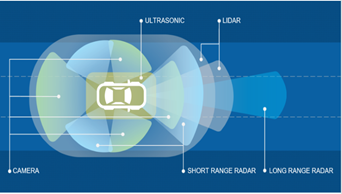

The lowest level of automation, level 1, features a single automated system that assists the driver. Adaptive cruise control, in which the vehicle automatically keeps a safe distance behind the car ahead, is a popular example of level 1 autonomy. Implementations vary, but they typically use some combination of LiDAR, radar, and cameras to automatically accelerate or brake so that the vehicle holds a safe distance from the vehicle ahead. Many newer car models that offer this system qualify as level 1 autonomous vehicles.
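To make the accelerate-or-brake behavior concrete, here is a minimal Python sketch of a constant time-gap cruise controller, one common textbook formulation of adaptive cruise control. It is not any automaker’s implementation: the function name, gains, time gap, standstill distance, and acceleration limits are hypothetical values chosen for illustration, and the lead vehicle’s distance and speed are assumed to arrive already measured by the sensors.

```python
def acc_acceleration(ego_speed, lead_distance, lead_speed, set_speed,
                     time_gap=1.8, standstill=5.0,
                     k_gap=0.2, k_rel=0.6, k_cruise=0.3,
                     a_min=-3.0, a_max=1.5):
    """Commanded acceleration (m/s^2) for a constant time-gap ACC sketch.

    Speeds are in m/s and distances in m. lead_distance is None when no
    vehicle is detected ahead. All gains and limits are hypothetical.
    """
    # Speed keeping: close the error to the driver's set speed.
    a_cruise = k_cruise * (set_speed - ego_speed)
    if lead_distance is None:
        return max(a_min, min(a_max, a_cruise))

    # Gap keeping: the desired gap grows linearly with ego speed.
    desired_gap = standstill + time_gap * ego_speed
    gap_error = lead_distance - desired_gap
    a_follow = k_gap * gap_error + k_rel * (lead_speed - ego_speed)

    # Use the more conservative command, then clamp to comfort limits.
    return max(a_min, min(a_max, min(a_cruise, a_follow)))
```

For example, `acc_acceleration(25.0, 30.0, 22.0, 30.0)` (ego at 25 m/s, a slower car only 30 m ahead) returns -3.0, the hardest braking this sketch allows. Taking the minimum of the cruise and follow commands means the controller never speeds up toward a vehicle it should be backing away from.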

Level 2

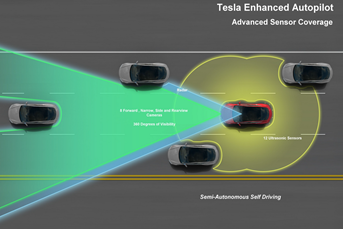

Level 2 automation relies on an advanced driver assistance system (ADAS) that can control both the steering and the acceleration or deceleration of the vehicle. Through a human-machine interface, an ADAS improves the driver’s ability to react to dangers on the road with early warning and automation systems. An ADAS uses a suite of high-quality sensors, including cameras and LiDAR, to provide 360-degree imagery, 3D object resolution, and real-time data. Other examples of ADAS features include anti-lock brakes, forward collision warning, lane departure warning, and traction control. Tesla Autopilot and Cadillac Super Cruise are examples of level 2 systems currently in use.
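Forward collision warning, one of the ADAS features listed above, is often explained in terms of time-to-collision (TTC): the gap to the vehicle ahead divided by the speed at which that gap is closing. The Python sketch below assumes both vehicles hold constant speeds and uses a hypothetical 2.5 s warning threshold; production systems apply their own, typically more elaborate, criteria.

```python
import math


def time_to_collision(range_m, ego_speed, lead_speed):
    """Seconds until contact if both vehicles keep their current speeds.

    Returns math.inf when the gap is not closing.
    """
    closing_speed = ego_speed - lead_speed  # m/s, positive when the gap shrinks
    if closing_speed <= 0.0:
        return math.inf
    return range_m / closing_speed


def forward_collision_warning(range_m, ego_speed, lead_speed, ttc_threshold=2.5):
    """True if the driver should be warned (threshold is a hypothetical value)."""
    return time_to_collision(range_m, ego_speed, lead_speed) < ttc_threshold
```

With a 40 m gap, an ego speed of 30 m/s, and a lead speed of 10 m/s, the TTC is 40 / 20 = 2.0 s, so `forward_collision_warning(40.0, 30.0, 10.0)` returns `True`.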

Level 3

Vehicles that have environmental detection capabilities and can make informed decisions on their own are considered level 3 autonomous. Despite these capabilities, they still require a human to take over when the system is unable to execute a task. The Audi A8L was set to hit the road with level 3 technology: its Traffic Jam Pilot combined a LiDAR scanner with advanced sensor fusion, processing power, and built-in redundancies. However, global regulators had not agreed on an approval process for level 3 vehicles, which forced Audi to abandon its level 3 plans. That changed when the Honda Legend, equipped with its own Traffic Jam Pilot automated driving system, received the world’s first approval for a level 3 autonomous vehicle in Japan.

Level 4

The main improvement from level 3 to level 4 is that level 4 vehicles can intervene on their own if something goes wrong or a system fails. With this ability, these cars do not require human intervention in most situations, although the human still has the option to manually override the vehicle. In the future, level 4 vehicles are expected to be used for ride-sharing and public transportation.

Level 5

Perhaps the hottest topic in engineering, level 5 vehicles have full driving automation and do not require human attention. These vehicles are expected to do without typical driving components such as steering wheels or pedals. While level 5 vehicles have been tested, none are available to the general public.

Recently, the timetable for level 5 autonomy has become murky, with many automakers backing away from their claims that level 5 vehicles would be available between 2018 and 2025. With very few cars approved for level 3 autonomy on public roads, there is clearly still room for innovation, and it will likely take another decade before level 5 automation is achieved.

Sensor Fusion in Autonomous Vehicles

Sensor Fusion

Sensor fusion is used in a variety of systems that need to output estimates based on the raw data from multiple sensors. It combines multiple sensor inputs to produce a single result that is more accurate than any of the individual inputs alone. A popular sensor fusion algorithm is the Kalman filter. A Kalman filter takes a series of measurements observed over time, filters out naturally occurring statistical noise and other inaccuracies, and produces a weighted estimate that is more accurate than the data from the sensors alone. To learn more about sensor fusion and the Kalman filter, check out our white paper.
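The core Kalman filter idea can be shown in one dimension. The Python sketch below estimates a single constant quantity (for example, a fixed distance) from noisy readings. The constant-state model, the process and measurement variances, and the initial guess are illustrative assumptions; real navigation filters track multi-dimensional states such as position, velocity, and attitude with matrix-valued models.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1000.0):
    """Return the filtered estimate after each measurement.

    x is the state estimate and p its variance. The state is modelled as
    constant; process_var lets it drift slightly between steps.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is unchanged, but uncertainty grows a little.
        p = p + process_var
        # Update: the Kalman gain k weighs the new reading against the prediction.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical readings of a true value of 10, each one off by +/-2.
readings = [10.0 + (2.0 if i % 2 else -2.0) for i in range(50)]
est = kalman_1d(readings, meas_var=4.0, process_var=1e-3)
```

Every raw reading is 2 units away from the true value, yet the final estimate lands much closer to 10. The gain k starts near 1 (trusting the first reading over the vague initial guess) and shrinks as the filter’s own variance p falls, so each new noisy reading moves the estimate less.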

Sensor Fusion in Autonomy

Advanced Driver Assistance Systems (ADAS)

Many current vehicles with ADAS use information from different sensors such as radar, optical cameras, LiDAR, or ultrasound. Sensor fusion combines information from these sensors to provide a clearer view of the vehicle’s environment and is integral to the advancement of these systems. A common example in ADAS is the fusion of information from a front camera and a radar. On its own, each sensor has weaknesses in environmental detection. A camera struggles in conditions such as rain, fog, and sun glare, but it is a reliable source of color recognition. Radar is useful for detecting object distance, but it is poor at recognizing features like road markings. Camera and radar fusion is found in Adaptive Cruise Control (ACC) and Autonomous Emergency Braking (AEB), two ADAS features found in many newer car models.
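The division of labor described above (radar for distance, camera for recognition) can be illustrated with a simple object-level fusion sketch in Python. It assumes, hypothetically, that both sensors report detections as bearings in a shared vehicle frame, that the camera adds a class label while the radar adds range and range rate, and that detections are paired greedily by closest bearing inside a fixed gate. Production systems typically use full object tracking and global assignment instead; the class names and the 2° gate are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CameraDetection:
    bearing_deg: float
    label: str                # e.g. "car", "pedestrian"


@dataclass
class RadarDetection:
    bearing_deg: float
    range_m: float
    range_rate_mps: float


@dataclass
class FusedObject:
    label: Optional[str]      # None when only the radar saw the object
    bearing_deg: float
    range_m: float
    range_rate_mps: float


def fuse(camera: List[CameraDetection], radar: List[RadarDetection],
         gate_deg: float = 2.0) -> List[FusedObject]:
    """Attach camera labels to radar detections by nearest bearing."""
    fused = []
    unused = list(camera)
    for r in radar:
        # Closest not-yet-matched camera detection in bearing, if any.
        best = min(unused, key=lambda c: abs(c.bearing_deg - r.bearing_deg),
                   default=None)
        if best is not None and abs(best.bearing_deg - r.bearing_deg) <= gate_deg:
            unused.remove(best)
            label = best.label
        else:
            label = None
        # Range comes from the radar; the label (if matched) from the camera.
        fused.append(FusedObject(label, r.bearing_deg, r.range_m, r.range_rate_mps))
    # Camera-only detections have no range, so this sketch drops them.
    return fused
```

For a camera that sees a car at 0.5° and a pedestrian at -20°, and a radar that reports returns at 0° (45 m) and 30° (12 m), `fuse` labels the 45 m return as a car and leaves the 30° return unlabeled, because no camera detection falls within its gate.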

Autonomous Vehicles

As noted earlier, a major advantage of sensor fusion in autonomous vehicles is that combined data from various sensors can overcome the environmental shortcomings of individual sensors. This reduces false negatives and false positives while increasing the overall performance and reliability of the system. Sensor fusion matters most in the path planning aspect of autonomous vehicles. Here, sensor readings are integrated to precisely estimate the vehicle’s state and predict the trajectories of surrounding objects. This allows the vehicle to perceive its environment more accurately, because a properly weighted fused estimate has a smaller noise variance than the individual sensor readings.
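The claim that a fused estimate has a smaller noise variance can be checked with the simplest case: two independent, unbiased readings of the same quantity combined by inverse-variance weighting. Each reading is weighted by 1/σ², and the fused variance is 1 / (1/σ₁² + 1/σ₂²), which is never larger than the smaller of the two input variances. The Python sketch below uses hypothetical camera and radar range readings; the variances are assumptions, not measured sensor specifications.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Inverse-variance weighted fusion of two independent, unbiased readings.

    Returns (fused_value, fused_variance).
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused_var = 1.0 / (w1 + w2)                 # always <= min(var1, var2)
    fused_value = fused_var * (w1 * z1 + w2 * z2)
    return fused_value, fused_var


# Hypothetical: camera says 50 m (variance 4 m^2), radar says 48 m (variance 1 m^2).
value, var = fuse_measurements(50.0, 4.0, 48.0, 1.0)
# value is about 48.4 m and var about 0.8 m^2, below both input variances.
```

The fused value leans toward the more trustworthy radar reading, and its variance drops below that of either sensor alone. The same weighting is what a Kalman filter update reduces to when the tracked quantity does not change.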

Inertial Labs’ Involvement in Autonomous Vehicles

Inertial Labs INS-D in Autonomous Navigation

The Inertial Labs INS-D is an Inertial Navigation System (INS) that uses data from gyroscopes, accelerometers, fluxgate magnetometers, a dual-antenna GNSS receiver, and a barometer. This data is fed into an onboard sensor fusion filter that provides accurate position, velocity, heading, pitch, and roll for the vehicle it is mounted on. Fused with aiding data from LiDAR, optical cameras, or radar, the INS-D can provide the data needed for object detection or simultaneous localization and mapping (SLAM) algorithms. For example, combining the INS-D with LiDAR and optical camera data can produce georeferenced, time-stamped data for vehicle navigation that is accurate to within a few centimeters. Georeferenced data is tied to a geographic coordinate system, so every point has a precise location in physical space, which is crucial for mapping a vehicle’s environment. Fusing optical camera data also allows this data to be colorized: the camera images are projected onto the georeferenced LiDAR data, so each point takes on the color of the corresponding point in the real world. This is especially important for object detection and for recognizing features such as road lines.
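The two steps described above, georeferencing a LiDAR return with the INS pose and colorizing it from the camera, can be sketched in plain Python. This is not Inertial Labs’ processing pipeline. It assumes, hypothetically, that the INS pose is given as north/east/down coordinates in a local metric frame plus heading, pitch, and roll in radians (ZYX rotation order); that the LiDAR and camera share the INS body frame (x forward, y right, z down) with zero lever arms and boresight angles; and that the camera is an ideal pinhole with intrinsics fx, fy, cx, cy. A common way to colorize, used here, is to project each LiDAR point into the camera image and take the color of the pixel it lands on.

```python
import math


def body_to_ned(yaw, pitch, roll):
    """Rotation matrix from body frame to NED: R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def georeference(point_body, ins_position_ned, yaw, pitch, roll):
    """Rotate a body-frame LiDAR point into NED and offset it by the INS position."""
    r = body_to_ned(yaw, pitch, roll)
    return [
        ins_position_ned[i] + sum(r[i][j] * point_body[j] for j in range(3))
        for i in range(3)
    ]


def colorize(point_body, image, fx, fy, cx, cy):
    """Project a body-frame point into a pinhole image and return its pixel color.

    image is a list of rows of pixel values. Returns None if the point is
    behind the camera or projects outside the image.
    """
    # Body axes (x fwd, y right, z down) -> camera axes (x right, y down, z fwd).
    xc, yc, zc = point_body[1], point_body[2], point_body[0]
    if zc <= 0.0:
        return None
    u = int(round(fx * xc / zc + cx))
    v = int(round(fy * yc / zc + cy))
    if 0 <= v < len(image) and 0 <= u < len(image[0]):
        return image[v][u]
    return None
```

For example, with a heading of 90° (due east) and zero pitch and roll, a point 10 m straight ahead of a vehicle at north/east/down (100, 200, 0) georeferences to approximately (100, 210, 0). In a real system, the measured lever arms and boresight angles between the INS, LiDAR, and camera would be applied before these steps.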

Real World Applications

Virginia Tech

Inertial Labs has partnered with the Autonomous Systems and Intelligent Machines (ASIM) lab in the Mechanical Engineering department of Virginia Tech to conduct research on Connected and Autonomous Vehicles (CAV). Students are using the INS-D, along with various other sensors, to convert a hybrid sedan into an autonomous car. The ASIM lab was established to conduct research on autonomy from a dynamical systems and intelligent controls perspective. Through a combination of sensor fusion techniques, connectivity through communications, and advanced learning algorithms, the ASIM lab has developed intelligent machines such as autonomous and semi-autonomous robots and vehicles.

Robotics Plus

Robotics Plus has developed a line of unmanned ground vehicles (UGVs) for precision agriculture applications. Inertial Labs’ Inertial Navigation System, fused with optical aiding data, can produce real-time georeferenced data. When fed into a robust algorithm, this data allows the UGV to be aware of its surroundings. Designed in response to the demand to mechanize orchard and horticultural tasks, these UGVs are continuously being developed for a range of applications in varying environments. Robotics Plus was created to solve the increasingly pertinent agricultural challenges of labor shortages, crop sustainability, pollination gaps, and yield security. An award-winning, innovative company, Inertial Labs is proud to work with Robotics Plus toward its continued goals of improving the grower experience and yield security.

Pliant Offshore

Pliant Offshore are experts in offshore measurement and control combined with 3D software technology. Inertial Labs has worked with Pliant Offshore to help them produce accurate products that withstand harsh environments and deliver precise results. Inertial Labs’ inertial technology is used on their unmanned surface vessels (USVs) to track and trace assets, review asset history, and monitor the wear, usage, and lifetime of assets. USVs can fuse data from Inertial Labs’ Motion Reference Units (MRUs) with a multibeam echo sounder (MBES) to inspect assets with accurate, georeferenced data and monitor them over time. With a mission of developing and improving products to maximize time efficiency, cost-effectiveness, and safety for end users, Inertial Labs is excited to continue working with Pliant Offshore.

What Do You Think?

Here at Inertial Labs, we care about our customers’ satisfaction and want to keep providing solutions tailored to the problems occurring today, while vigorously developing products to tackle the problems of tomorrow. Your opinion is always important to us, whether you are a student, an entrepreneur, or an industry heavyweight. Share your thoughts on our products, any recommendations you have, or just say hello at opinions@inertiallabs.com.

Trademark Legal Notice: All product names, logos, and brands are property of their respective owners. All company, product, and service names used in this document are for identification purposes only. Use of names, logos, pictures, units, and brands does not imply endorsement by Pliant Offshore, Robotics Plus, Virginia Tech, Audi, Honda, Cadillac, or Tesla.